Using Human-Centered Design with SAFe

By Joseph Montalbano, SPC, and Brad Lehman

Authors of Human-Centered Agile: How to Build Next-Generation Agile Programs Using Human-Centered Design

Note: This article is part of the Community Contributions series, which provides additional points of view and guidance based on the experiences and opinions of the extended SAFe community of experts.

Overview

As Human-Centered Design (HCD) gains wider Enterprise adoption, many organizations are combining HCD with SAFe to get a “best of both worlds” implementation and are achieving striking results as they do so. Over the past two years the authors, an SPC and an HCD practitioner, have successfully integrated Human-Centered Design methods and mindset into multiple mature SAFe programs, including a Large Solution, at a large government agency, and the impact has been tremendous. In this paper (selections from our book on our experiences, forthcoming from Productivity Press), we discuss lessons learned from these implementations and some of the ways that HCD has helped us improve quality, deliver more value faster, and build even more robust SAFe implementations.

Our experience has shown that a blended HCD/SAFe approach can sometimes, though not always, be used to obtain user insights—valuable understanding of user attitudes, behaviors, and expectations—faster and cheaper than by relying exclusively on feedback from released functionality. A blended HCD/SAFe approach can also dramatically increase quality and end-user satisfaction. It can, however, be a challenge because these professions have different mindsets and sometimes struggle to speak to one another as a result: as a generalization, Agilists think and work in the solution space while HCD practitioners think and work in the problem space (although this might have been truer prior to the inclusion of Design Thinking and Customer Centricity in SAFe 5.0).

This integration also presents a planning challenge, as HCD practitioners need to plan their work just a little bit ahead of the development teams. To use a car metaphor, they need to be able to see just a little farther than the headlights.

What Do We Mean by Human-Centered Design?

Design is the intentional creation of a specific experience or outcome.

That’s it. It doesn’t matter whether the Solution being developed is a website or a chair, an experience is being created for the person using the Solution. When design is discussed, especially for software, people most often start thinking about visual design—the color palette, pictures, and balance of elements. Visual design is one aspect of design, but additional aspects of design are vital to good software development—interaction design, information architecture, content strategy—and that doesn’t even cover the research and testing activities that have now become synonymous with “designing a user experience.”

Human-Centered Design is a design methodology that seeks to align business goals with user needs by keeping the user (the human) in the frame throughout the Solution development lifecycle. This requires:

- Human-Centered Discovery

- Human-Centered Ideation and Concept Validation

- Human-Centered Solution Validation, Refinement, and Evaluation

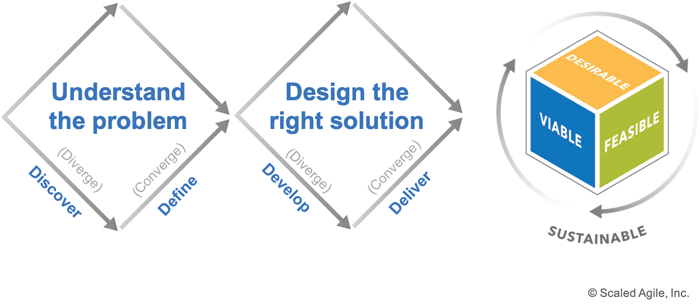

SAFe’s visualization of Design Thinking (Figure 1) emphasizes understanding the problem, the solution context, and the evolution of Solutions. The first diamond represents the problem space, and the second diamond represents the solution space. As it turns out, this is also a good visualization of HCD thinking and methods, demonstrating the synergy between the two disciplines.

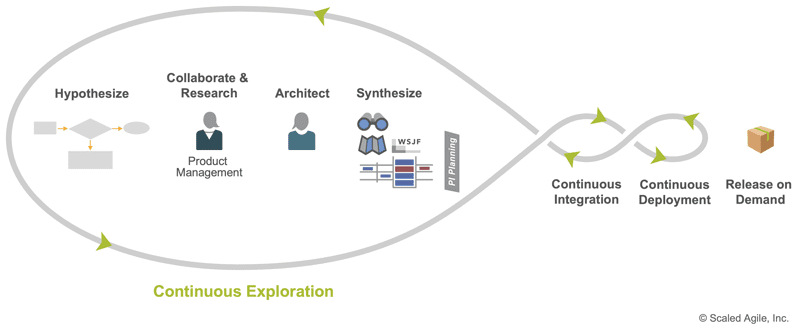

There is a natural overlap between HCD methods and thinking and SAFe’s concepts of Customer Centricity, Design Thinking (see figure above), Lean UX, BDD, the Measure and Learn activities that are a part of Release on Demand, as well as with the Hypothesize, Collaborate & Research, Architect, and Synthesize activities that are at the heart of Continuous Exploration. Given their natural commonalities and synergies, combining these two mindsets and approaches is a potentially powerful option that practitioners should consider.

HCD Activities in Continuous Exploration

One of the early benefits of adding HCD practitioners to your SAFe implementation is that teams start getting better stories with better Acceptance Criteria by applying HCD methods and thinking. HCD practitioners will challenge the team to take a full inventory of what they actually know versus what they only think they know. This typically happens through a series of “Can we satisfactorily answer…” questions. Those answered in the negative are candidates for future Exploration. HCD practitioners will be instrumental in ensuring not only that stories have strong Acceptance Criteria, but also that the “why” (the “so that…” part of the story) is as well understood by the team as the “how.” In our experience, HCD practitioners who are unfamiliar with BDD pick it up quickly, and stories start getting stronger almost immediately. The pattern that we have observed most often is that Backlog Refinement takes longer, but the results are better stories and a more robust shared understanding and commitment. We think that’s a great tradeoff.

In Figure 2, we see the four activities that are a part of Continuous Exploration. HCD can impact all four but tends to have the greatest impact on Hypothesize and Collaborate & Research.

While Continuous Exploration activities were conducted on these programs prior to the addition of the HCD skillset, our experience is that adding people who specialize in HCD and are dedicated to this task enhances these activities tremendously and brings a new level of rigor to them. Specifically, our HCD teams started doing in-person user interviews (not surveys) and focus groups, which were a source of very high-quality insight. They did user observation (pre-COVID), an activity called “contextual inquiry.” They gathered additional user sentiment by reviewing support tickets and employee engagement surveys. They synthesized all this insight into Personas (which often include scenarios) that describe how we understand the needs, pain points, and desires of a class of users. They created Journey Maps to collect similar insights along a more longitudinal process, and Empathy Maps to compare different groups facing the same problem and identify opportunities for new features. These artifacts ensure teams have the necessary tools to consider not just the functional needs of end-users, but their social and emotional needs as well.[1]

Once this happens, a beautiful transformation begins to take place: teams start to rethink and organically evolve the way they plan their work, front-loading their thinking with HCD activities. They practice Design Thinking more deliberately, and they certainly have a more Customer-Centric mindset.

Teams get the “rhythm” of the HCD work and developers learn how to “dance” with HCD practitioners as they plan. Instead of relying entirely on user feedback coming from value delivered, the team now starts to get at least some of this user insight before they deliver anything, making their deliveries stronger from the first Iteration. Teams Take an Economic View and learn how to make conscious decisions about which method is fastest, cheapest, and best for obtaining insight for their team and in their local context. HCD thinking and methods are integrated into everything, not isolated in pockets or silos. They start delivering like never before. They are beginning to practice what we have come to call “Human-Centered Agile.”

As word of the high levels of innovation and productivity delivered by these “Human-Centered Agile” teams spreads, demand for limited HCD capacity increases. Our experience was that requests for HCD skills start to come from other ARTs and beyond. The teams now struggle with how to manage demand and plan this work.

Planning HCD Work: The Architectural Runway and the “Research Roadmap”

We have observed that teams often have difficulty planning this work because HCD practitioners look beyond the current Iteration when they plan, and their work is sometimes not consumed in the current Iteration. That feels “un-Agile” to many teams. The Architectural Runway is the construct that SAFe uses for visualizing the Compliance Enablers, Architecture Enablers, Infrastructure Enablers, and Exploration Enablers that must be coordinated and executed to keep the ART moving fast. Exploration Enablers are at the heart of HCD work, though it should be noted that not all Exploration Enablers involve HCD work.

In our case, the Architectural Runway metaphor just didn’t resonate with our HCD teams. They felt like their work “didn’t fit” with the mostly technical work there. The teams wanted a different construct to visualize this HCD work, which was, in their minds, different. We needed a metaphor to help these teams Apply Systems Thinking to the planning of exploratory HCD work that needs to be done before development work, and to give them a mental model for planning work just a little further into the future than they were accustomed to. Not only did this new construct have to work, but it also had to function within the SAFe framework without violating it, and it had to be acceptable to the HCD teams. The teams were used to using Roadmaps, so this seemed like a good place to start. We came up with the idea of a “Research Roadmap” to visualize and think about the Exploration Enablers the HCD practitioners will be working on.

In Figure 3, the Research Roadmap is shown in the white box. We see HCD Exploration Enablers to support a single Feature. All this work is contained in the Architectural Runway along with Compliance Enablers, Infrastructure Enablers, and Architecture Enablers; the Research Roadmap is just a construct to help teams plan HCD work a little bit ahead of development work.

This Research Roadmap, driven by the Features in the current and upcoming PIs, helped our teams plan HCD work using a lightweight model that they were already familiar with. It can be thought of as the HCD Exploration Enabler Features in the Architectural Runway (AR), and the idea, of course, is to get this Research Roadmap built just-in-time, like laying tracks for the ART just as the train is coming. This resonated with our HCD teams and made them feel more involved in the process, which led to them being more fully engaged in planning. The Research Roadmap doesn’t change the existing AR or the responsibilities of the System or Solution Architect for prioritizing the AR; it’s just a way to visualize the HCD portion of the AR work. Coordination between the Architect community and the HCD team is still essential to prioritizing and planning AR work.
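As a rough illustration of the planning idea, the Research Roadmap can be pictured as a list of Exploration Enablers, each tied to the Feature it supports, with a simple check that the research is planned to finish before development on that Feature begins. The sketch below is not part of SAFe or of the authors' tooling. The class names, the fields, the iteration numbers, and the sample Feature are all hypothetical and chosen only to make the "a little bit ahead" rule concrete.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    start_iteration: int  # assumed: iteration in which development is planned to start


@dataclass(frozen=True)
class ExplorationEnabler:
    name: str
    feature: str          # name of the Feature this HCD research supports
    done_iteration: int   # assumed: iteration in which the research is planned to finish


def late_enablers(features, enablers):
    """Return names of enablers not planned to finish before their Feature starts."""
    start = {f.name: f.start_iteration for f in features}
    return [e.name for e in enablers if e.done_iteration >= start[e.feature]]


# Hypothetical roadmap for a single Feature.
features = [Feature("Self-service renewal", start_iteration=4)]
enablers = [
    ExplorationEnabler("User interviews", "Self-service renewal", done_iteration=2),
    ExplorationEnabler("Concept validation", "Self-service renewal", done_iteration=4),
]

print(late_enablers(features, enablers))  # ['Concept validation']
```

In this made-up example the concept validation work is flagged because it would finish in the same Iteration that development starts, so its insight would arrive too late to shape the first stories.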

We have learned that planning this work is harder than it looks and should be done with great care. Our advice to teams is not to let early difficulties deter you from this endeavor. This takes some practice. Finally, note that there is a great temptation for teams to turn the Research Roadmap into a Research Queue. Practitioners are urged to stay vigilant to ensure this doesn’t happen.

Team Topology: Which Teams Do the HCD Work?

Armed with the new construct of the Research Roadmap, we turned our focus to who would be doing this work so we could plan it better. After multiple experiments with different team topologies, we found that having one team of HCD specialists on the ART, with that work planned effectively, works best. It is certainly nice to also have some of these skills at the team level, but the HCD team can grow them there over time through pair work, mentorship, and training as part of the Team Learning activity described in the Continuous Learning Culture competency.

We have come to think of Lean UX not only as a “mindset, culture, and process…” but also as an ART-level Shared Services team. This Lean UX/HCD team concentrates on the Research Roadmap. Finding the right PO for this team can be tricky, especially if you are adding this skill set for the first time and don’t have an existing depth of talent in that area.

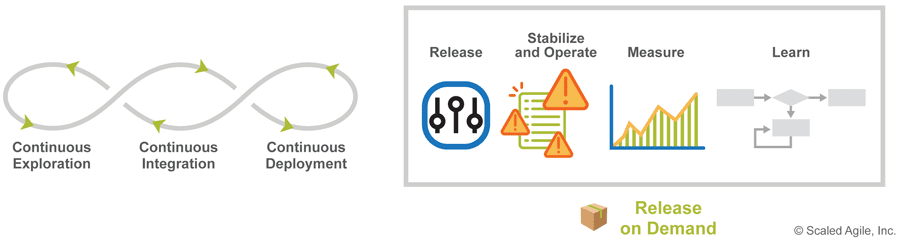

Measure and Learn: HCD Drives “Continuous Evaluation” with Quantitative and Qualitative Methods

Our HCD practitioners brought new tools and rigor to the way we conduct the Measure and Learn activities listed in Release on Demand (Figure 4), though they prefer to think of it as “Continuous Evaluation.” The results of both the post-release Quantitative Evaluation and Qualitative Evaluation that HCD teams conduct are brought into subsequent Iteration Planning, Backlog Refinement, and PI Planning. Frequently this insight will generate new Exploration Enablers for the Research Roadmap along with new Features and Stories for development. Sample activities are listed in the table below.

| Quantitative Evaluation | Qualitative Evaluation |
| --- | --- |
| Evaluate True North Metrics | Asynchronous Sentiment Gathering and Analysis (e.g., Surveys, Net Promoter Scores, Diary Studies, etc.) |
| Evaluate Signal Metrics | Contextual Evaluation / Observation of usage |
| Evaluate Leading Indicators | In-Person Sentiment Gathering (interviews, focus groups, etc.) |
| | Digital Ethnography / External Community sentiment gathering (e.g., user communities, social media measurement, etc.) |

The Quantitative Evaluation and Qualitative Evaluation activities listed in the table are critical because a Feature in the wild always performs at least a little differently than expected when it was validated in a controlled environment, as do users. These activities and the review of the insight they generate on cadence allow us to ensure that we are effectively learning from each release and that we improve relentlessly. This also helps us Continually Evolve Live Systems, as described in the Enterprise Solution Delivery competency.

Our HCD teams have added a level of holistic Solution-level evaluation to what had previously been Feature-level evaluation. Solution satisfaction is rarely the sum of that Solution’s parts, and this is especially true when considering the qualitative and emotional impact that can lead to Solution adoption and loyalty on one hand, or frustration and abandonment on the other. It is key that Quantitative and Qualitative evaluation go hand-in-hand because quantitative measures give you the “what”—you can see behaviors at scale, but not the “why.” Qualitative measures, by contrast, will give you a sense of how users are thinking, and why they are responding as they do, but cannot give you a direct measure of actual performance. This area was considered “low hanging fruit” by our HCD team and they were able to make dramatic improvements in this area very quickly. These improvements were like an engine powering Customer Centricity with a steady stream of sentiment, insight, and new ideas.

Conclusion

Integrating teams of HCD practitioners into mature programs presents great opportunities for organizations but introduces some challenges for practitioners, specifically around team formation and topology and its impact on planning. Teams will struggle to plan this work for a few PIs, but in our case, it was well worth the effort in the end. We have been able to measure higher quality, improved end-user sentiment, and more value delivered faster. We encourage practitioners who may be struggling to integrate and plan this work, as we were, to float the idea of the Research Roadmap to their teams and see if they are willing to give it a try. It worked like magic for us. Finally, use your new HCD skills not only to enhance all the activities in Continuous Exploration but also to enhance the Measure and Learn activities in Release on Demand with HCD-driven Quantitative and Qualitative Evaluation activities. We expect that you will be surprised by the results.

The authors would like to thank David Tilghman, James Sperry, and Jeff Nichols for kindly reviewing and critiquing this article for us.

[1] Marketing Malpractice: The Cause and the Cure, by Clayton M. Christensen, Scott Cook, and Taddy Hall. Harvard Business Review, December 2005. https://hbr.org/2005/12/marketing-malpractice-the-cause-and-the-cure